The EU Strikes Again As A Global Leader In Mindful Media: Updated “Code Of Practice On Disinformation”

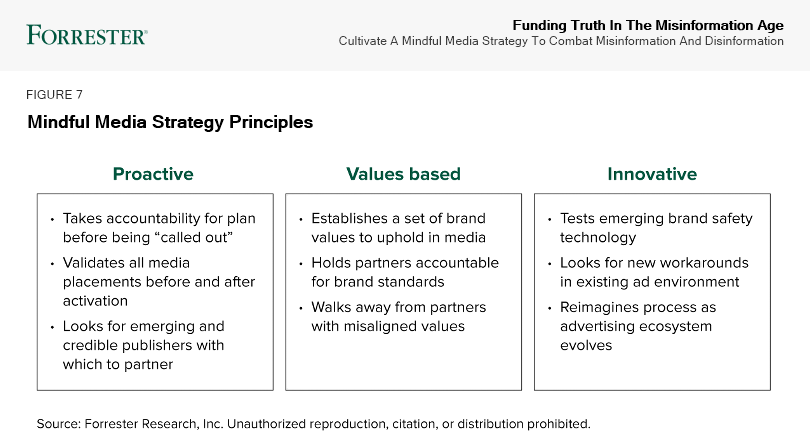

The EU announced an updated Code of Practice on Disinformation, aimed at combating the online spread of disinformation via regulatory measures, including “demonetizing the dissemination of disinformation.” Forrester’s research shows that the monetization of disinformation is a vicious cycle in which the ad supply chain infrastructure supports and funds, often inadvertently, the spread of disinformation across the open web and social media platforms. Mindful media is proactive, values-based, and innovative (see figure). The EU consistently demonstrates these qualities, from its strong and deliberate action to protect data privacy through the GDPR to this updated code, which represents a meaningful step toward defining and destroying disinformation online. These steps also show that the United States is once again playing catch-up. This code tackles several key roadblocks to disinformation prevention.

Figure — Mindful Media Principles

Stripping Disinformation Sites Of Advertising Will Transform The Supply Side

Adtech makes it relatively easy for people or groups to create sites with whatever content they please and to monetize those sites with ads. The content could be plagiarized, propaganda, or even developed specifically to be monetized (“made for AdSense,” or MFA, sites). This updated code promises to prevent adtech from allowing disinformation sites to “benefit from advertising revenues,” and it will help defund and demonetize disinformation because:

- Blocking the tech makes disinformation harder to fund. Prior to this updated code, there was no oversight of the adtech industry’s role in disinformation. While some companies are judicious about the websites they’re willing to monetize, others are perfectly fine with approving disinformation sites to run advertising. One of the biggest challenges for brands and agencies has been that they can try to put as much protection in place as possible, but if the supply side is still monetizing disinformation and allowing it into the inventory, blocking it entirely is impossible.

- Demonetizing the content makes SSP incentives irrelevant. Incentives across the advertising supply chain are not set up for positive change. For supply-side platforms (SSPs), more inventory means more monetizable impressions, regardless of where they come from. Advertisers must rely on the SSPs to “do the right thing” and remove “bad” inventory from their supply. We know that’s not happening, and plenty of unsuitable content is slipping through the cracks. With this code in place, the EU is simply cutting off the head of the dragon.

- Regulation places content decisions into the hands of humans. Now that certain adtech that disseminates disinformation can be blocked and monetization limited, the decisions about how to handle questionable content are left in the hands of professionals. Media, technology, and advertising professionals, along with individual creators (and more), will play a role in determining what content goes with what context and will exercise judgement in the name of positive and lucrative audience experiences. This reintroduction of checks and balances will not be perfect, but it allows communities and corporations to play a more active role in determining what content to promote.

Tangible Consequences Will Enforce Social Media’s Spotty Moderation

Most major social media platforms have some level of content moderation in place, and many of their policies include the mitigation of misinformation and disinformation. The enforcement of these policies is inconsistent, however. Despite Facebook’s policies, engineers discovered a “massive ranking failure” in the algorithm that promoted sites known for distributing misinformation rather than downranking them. YouTube was seen as playing a significant role in the January 6 attack on the Capitol, when a creator connected to the Proud Boys used YouTube to amplify extremist rhetoric. Google, Meta, and Twitter, among others, have agreed to this code proposed by the EU, which signals their willingness to take greater responsibility for the harmful content that gets distributed across their platforms. On its own, the code won’t be enough to promote change. The EU is connecting it to the Digital Services Act (DSA), however, which incentivizes companies to follow the code or risk DSA penalties.

From Privacy To Disinformation, US Regulators Are Playing Catch-Up

This isn’t the first time we’ve seen Europe outpace the United States on regulatory changes or guidance that impact advertising and marketing. In 2016, the EU adopted the GDPR, a landmark regulation protecting user data and privacy. Meanwhile, four years after the GDPR went into effect, the US still doesn’t have a federal privacy law (although the legislature just introduced a proposed bill this month). This code for disinformation is yet another example of Europe taking a proactive approach that’s paired with decisive action. Until the US follows suit, advertisers in the US will hopefully reap some of the benefits of major tech companies making changes to adhere to the EU’s code.

To hear more about our perspective on the role the advertising industry plays in funding disinformation, check out Forrester’s podcast: What It Means — Why Are Brands Funding Misinformation?