DeepSeek-R1: Why So Startled?

This blog post follows several others by my excellent colleagues at Forrester, who have offered various approaches to thinking about and understanding DeepSeek-R1.

Last week saw a wave of market hysteria surrounding DeepSeek's release of an open-source model that purportedly matches the capabilities of OpenAI's o1 at a fraction of the cost. Now that the dust is settling, it's helpful to take a step back and put the kerfuffle surrounding this release in context.

Understanding DeepSeek’s Innovations And Optimizations

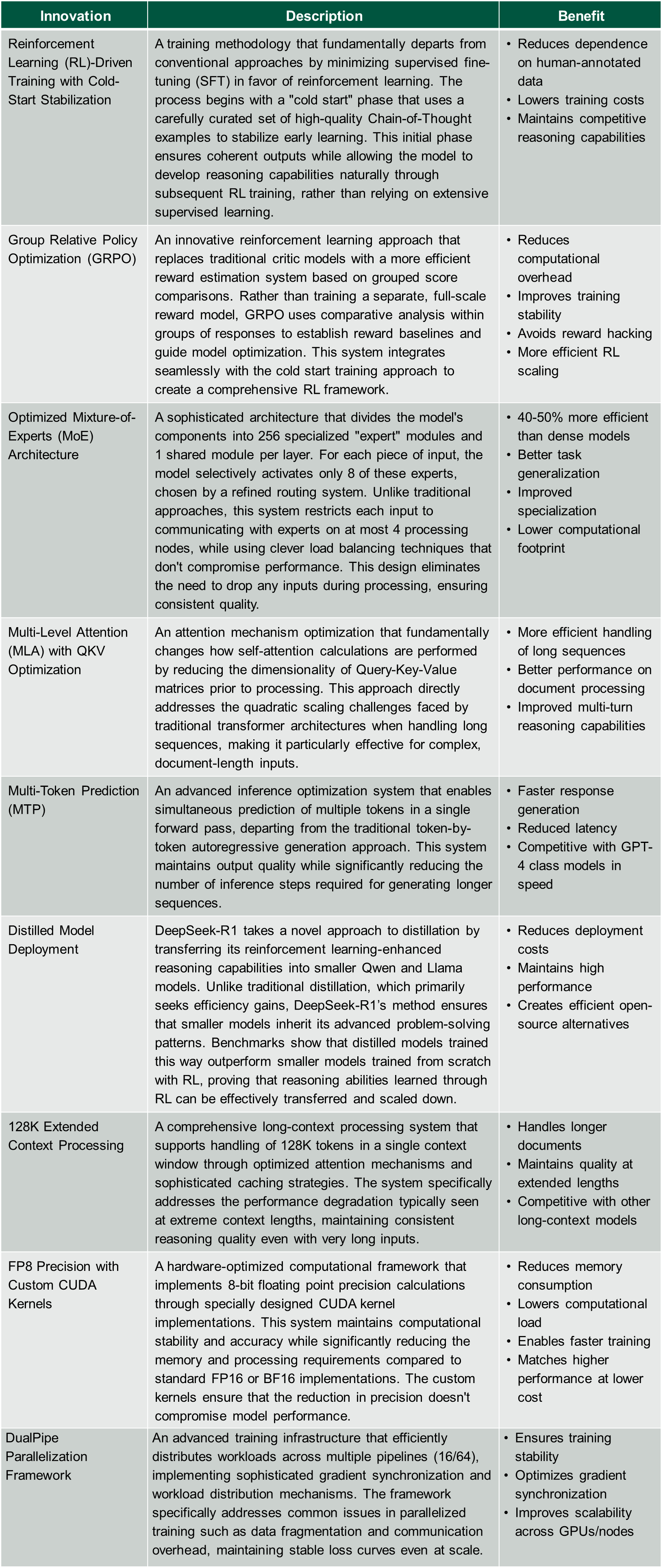

While DeepSeek's breakthrough in cost efficiency is noteworthy, treating it as a "Sputnik moment" for AI misses a fundamental truth: Both incremental improvements and sudden breakthroughs in price-to-performance ratios are natural parts of any emerging technology's evolution. Over the last two years, diverse research efforts across academia and commercial organizations have focused not only on enhancing reasoning performance but also on improving the price-performance of generative AI models. DeepSeek-R1 represents a significant leap forward in reducing the price-to-performance ratio through several technical innovations and optimizations that span the entire AI stack. Many of these were first introduced in the earlier V3 model and then carried over into, or improved in, R1. Following is a listing of some of those innovations and their benefits:

Together, these innovations enable DeepSeek to achieve competitive performance at a fraction of the traditional cost, but they are more a series of sensible optimizations than unexpected miracles. Moreover, DeepSeek's release of the model under the MIT license, its relative openness in sharing its approach through published papers, and the current stage of innovation in the AI industry at large ensure that these techniques will cross-pollinate and be improved upon by others in the coming months. The rapid pace of AI development means that today's cost breakthrough often becomes tomorrow's baseline. The swift introduction of efficiency-optimized models such as Alibaba's Qwen2.5-Max and OpenAI's o3-mini just days after the DeepSeek-R1 announcement illustrates how DeepSeek's cost-efficiency breakthrough is already accelerating the industry's shift toward more resource-efficient AI development.

The Economics Of AI Will Continue To Evolve

Disruptions like DeepSeek's breakthrough serve as a reminder that AI progress will be shaped by a mix of gradual improvements and step-function changes contributed by a broad ecosystem of startups, open-source communities, and established tech giants. Focusing too heavily on a single breakthrough risks missing the forest for the trees. DeepSeek's success highlights the growing importance of open-source innovation in driving down AI development costs and the potential for resource-efficient methodologies to accelerate progress. The AI revolution is a marathon, not a sprint, and this is but one stretch of the race. The winners will be those who can nimbly navigate an environment of perpetual disruption, not those who react with knee-jerk hysteria to every new development.